Kadim website

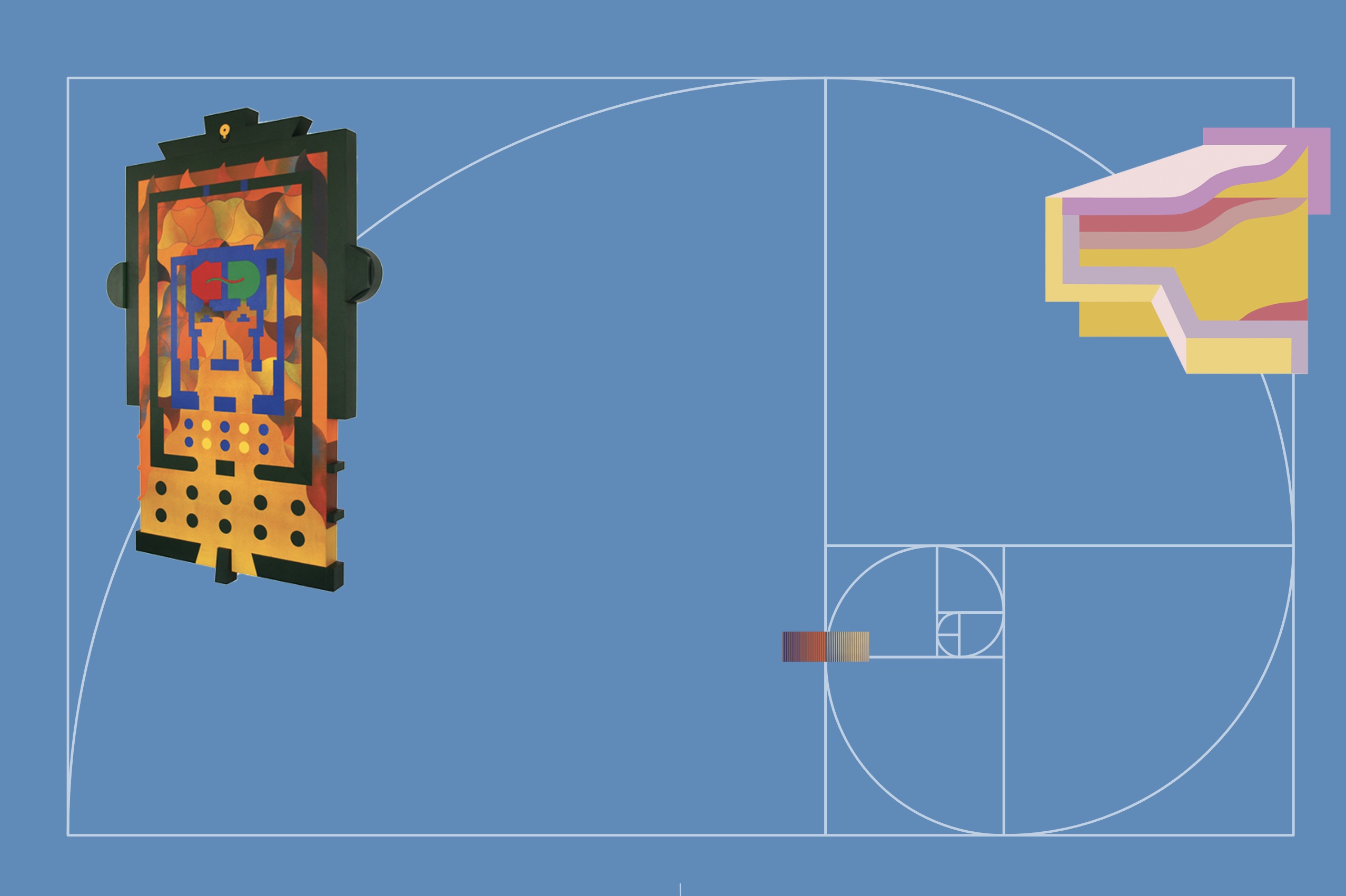

I programmed the animations for the Reuven Berman Kadim website

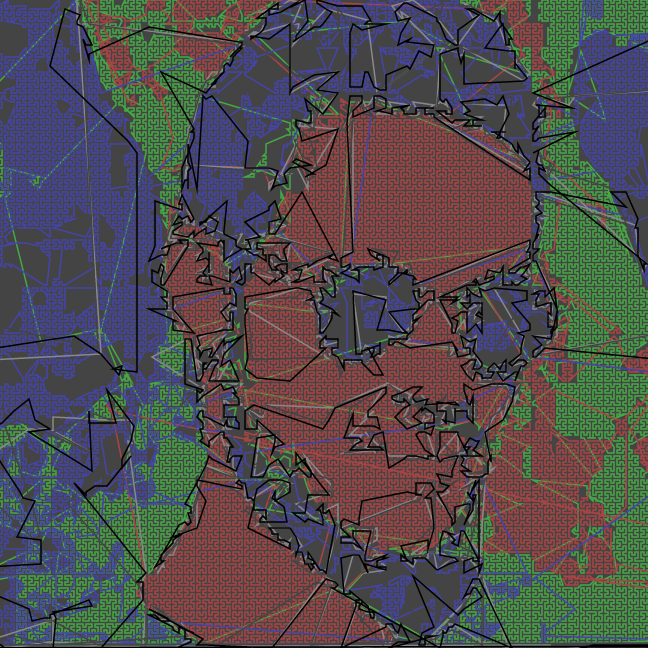

I also made a homage to one of his works

and this is my website on the world wide web

I made some prints, played some chords

I solve problems for a living, probably using code;

I took some pictures, made some gifs,

The things I make are mostly related to:

android, arduino, camera, sensors, sonification, servo, video installation, hackathon, kinect, interaction, photography, code, vision, gifs, hardware, electronics, fun, 3d, movement, processing, music

I made this website :)

I programmed animations for Reuven Berman Kadim

I made an Image Filter for Canva

I got an Honoraria grant to build a Huge Chameleon Sculpture for Burning-Man

I wrote a compiler in 3 days & 50 lines of code

I built an Eye-control interface for people with ALS

I developed Demos and Mockups for natural gesture TV interfaces

I developed a Smart-Camera Android App for Optimizing Image Composition

I built an Experiment to analyze people's behavior with AI

I made an on/off switch using a pants zipper

I made some prints using various techniques

I helped this person build a Camera Focus Controller

I helped this dude build a Gesture Controlled Orchestra

I helped this guy build an Accordion Controller

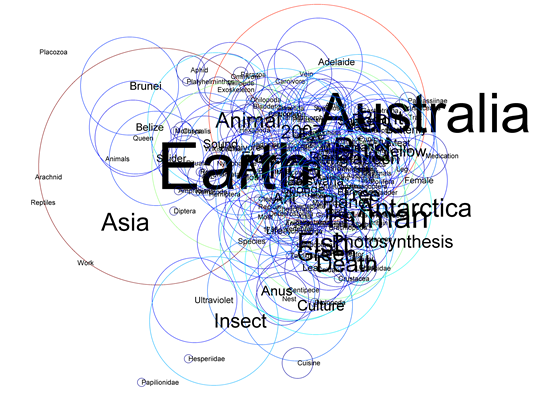

I made a Visualisation of Wikipedia articles

I developed and taught a Workshop for making motion-games using Depth Camera and Unity

I am currently self-employed doing freelance work

I programmed the animations for the Reuven Berman Kadim website

I also made a homage to one of his works

I made a typewriter art Image Filter for Canva

I got an Honoraria grant to build a Huge Sculpture for Burning Man 2018

The sculpture received an Honoraria grant and was built by a collective of artists, dreamers, engineers and various people from the local burner community.

It is 20 meters long and 7 meters high. It is covered with 20,000 LEDs that sit on a crafted steel frame. It offers delightful, colorful animations full of light that react and respond to its surroundings.

I wrote a compiler in 3 days & 50 lines of code

I built an Eye-control interface for people with ALS

EyeNav uses a live video stream from a camera mounted on a glasses frame and pointed directly at the user's eye. The video is processed and analyzed with a computer vision algorithm that tracks the eye movements and translates them into keystrokes (up/down/left/right). It is inspired by the eyeWriter project and uses the same low-cost hardware (a webcam on plastic glasses). What makes EyeNav different is that, unlike direct, mouse-like cursor manipulation, which can be tiresome, it is based on discrete gestures (like a keyboard) for navigation. The project was conceived, born, and took its first steps in 12 hours, during a hackathon for developing apps for people with disabilities.
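The discrete-gesture idea can be illustrated with a small sketch. It is not the project's code: it assumes some tracker already reports the pupil position in image pixels, and the names `classify_gaze`, `gestures_to_keys` and the `dead_zone` threshold are made up for this example. Whether a positive x offset means "left" or "right" depends on how the camera is mounted, so that mapping is an assumption too.

```python
def classify_gaze(pupil, center, dead_zone=15.0):
    """Map a pupil position to a discrete direction, or None when near rest."""
    dx = pupil[0] - center[0]
    dy = pupil[1] - center[1]  # image coordinates: y grows downward
    if max(abs(dx), abs(dy)) < dead_zone:
        return None  # eye is close to its resting position
    if abs(dx) >= abs(dy):
        return "right" if dx > 0 else "left"
    return "down" if dy > 0 else "up"


def gestures_to_keys(samples, center, dead_zone=15.0):
    """Emit one keystroke each time the eye enters a new direction."""
    keys = []
    previous = None
    for pupil in samples:
        direction = classify_gaze(pupil, center, dead_zone)
        if direction is not None and direction != previous:
            keys.append(direction)
        previous = direction
    return keys


# Hypothetical pupil positions: rest, glance right (two frames), rest, glance up, rest.
samples = [(100, 100), (130, 102), (135, 101), (100, 100), (98, 60), (100, 100)]
keys = gestures_to_keys(samples, center=(100, 100))
print(keys)
```

Holding a glance produces a single keystroke rather than a stream of them, which is the keyboard-like behavior described above.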

I helped this guy build a remote focus controller for a DSLR camera

Read the tutorial

I helped this genius friend build the Home-Appliances Electronic Orchestra

Orchestra Der Elektrogeräte from Avishai Lapid on Vimeo.

I helped this dude with a concept for an interactive ad for a Falafel Shop

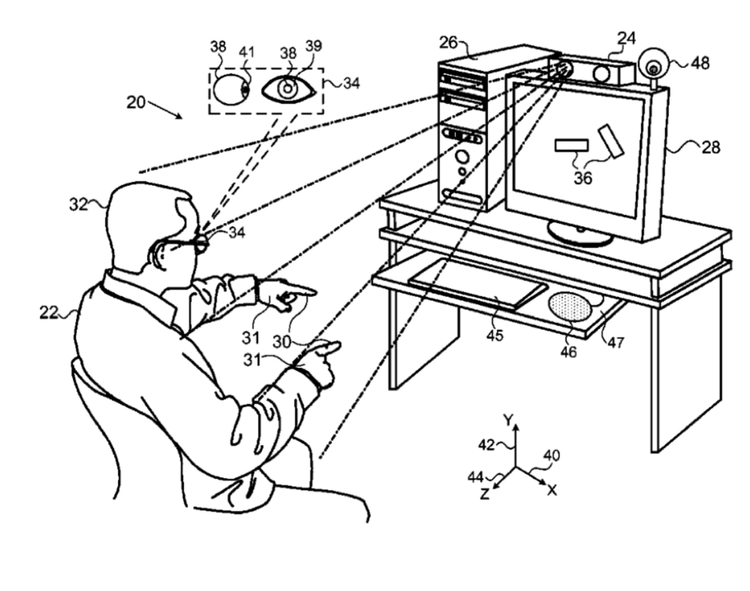

I developed Demos and Mockups for natural gesture TV interfaces at Primesense

Reach is Primesense's TV control paradigm, which demonstrates a concept of direct media access using natural gestures. Developed at Primesense UX Group.

I developed the "Smart-Camera" Android App for Optimizing Image Composition

Recent years have seen dramatic progress in both the hardware and software driving digital photography. We can now use the processing power of smartphones to create stunning visual effects. Alas, almost no effort has been made to improve the _composition_ of images while shooting the photo. This work addresses the challenge by combining computer vision and photography theory to suggest an automatic 'ViewFinder'.

I built an interactive, typographic, animated installation for Oded Ezer

‘We Are Family’ is an interactive, typographic-animated-gif installation that makes use of fragments from found online political, brutal, pornographic and other videos to create a new kind of typographic visual language. Try it yourself at the project page. Read more at the Oded Ezer website.

I made an on/off switch using a pants zipper

A proof of concept for using a zipper as an on/off switch:

The switch triggers tactile feedback through a servo hidden in the belt, sending the wearer a silent, kindly reminder if the zipper is left open for too long.

I made some prints in an alternative printing process workshop

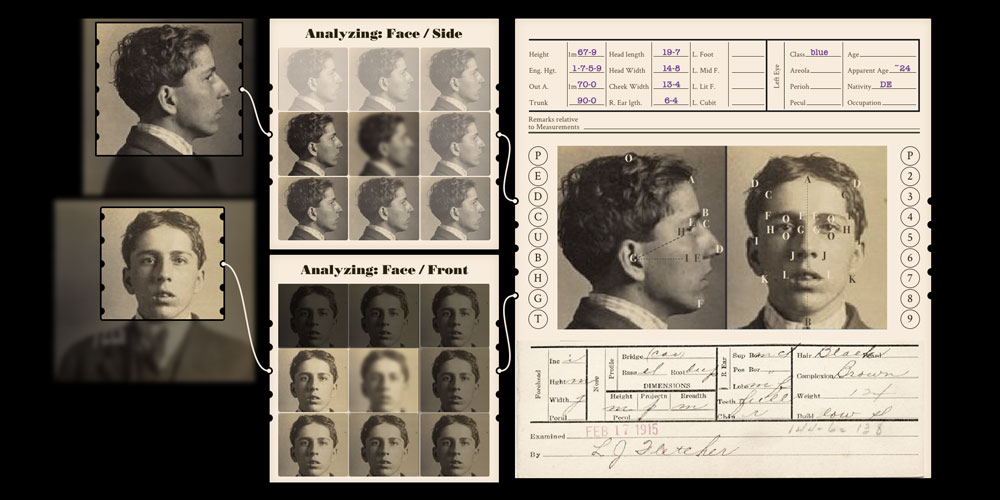

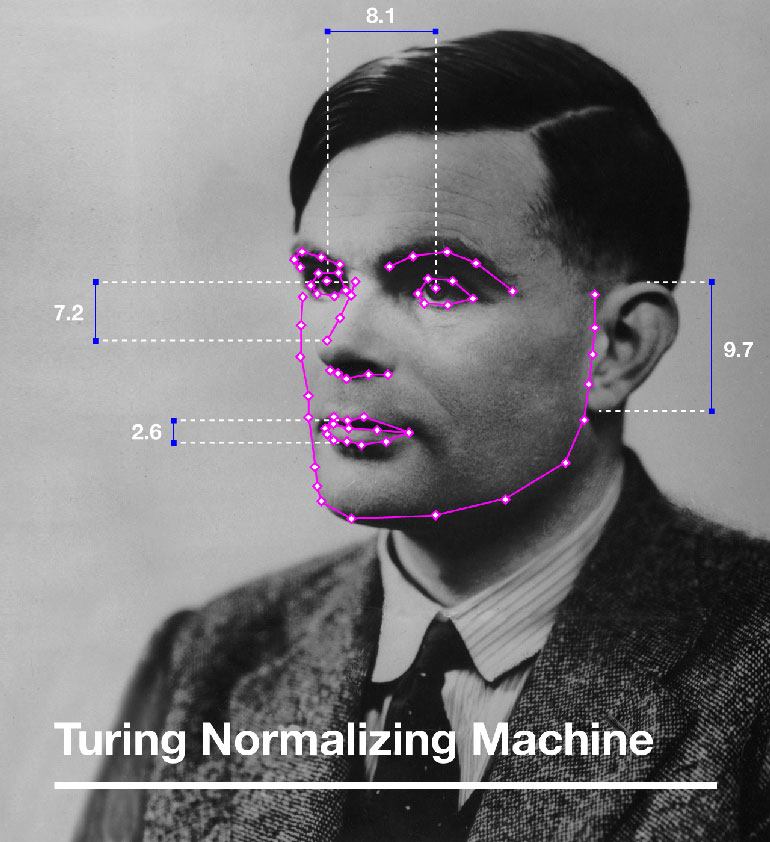

I made an experiment in Machine Learning & Algorithmic Prejudice with Mushon Zer-aviv

Part of several exhibitions shown in Israel, Athens and Kiev

Ars Electronica Honorary Mention

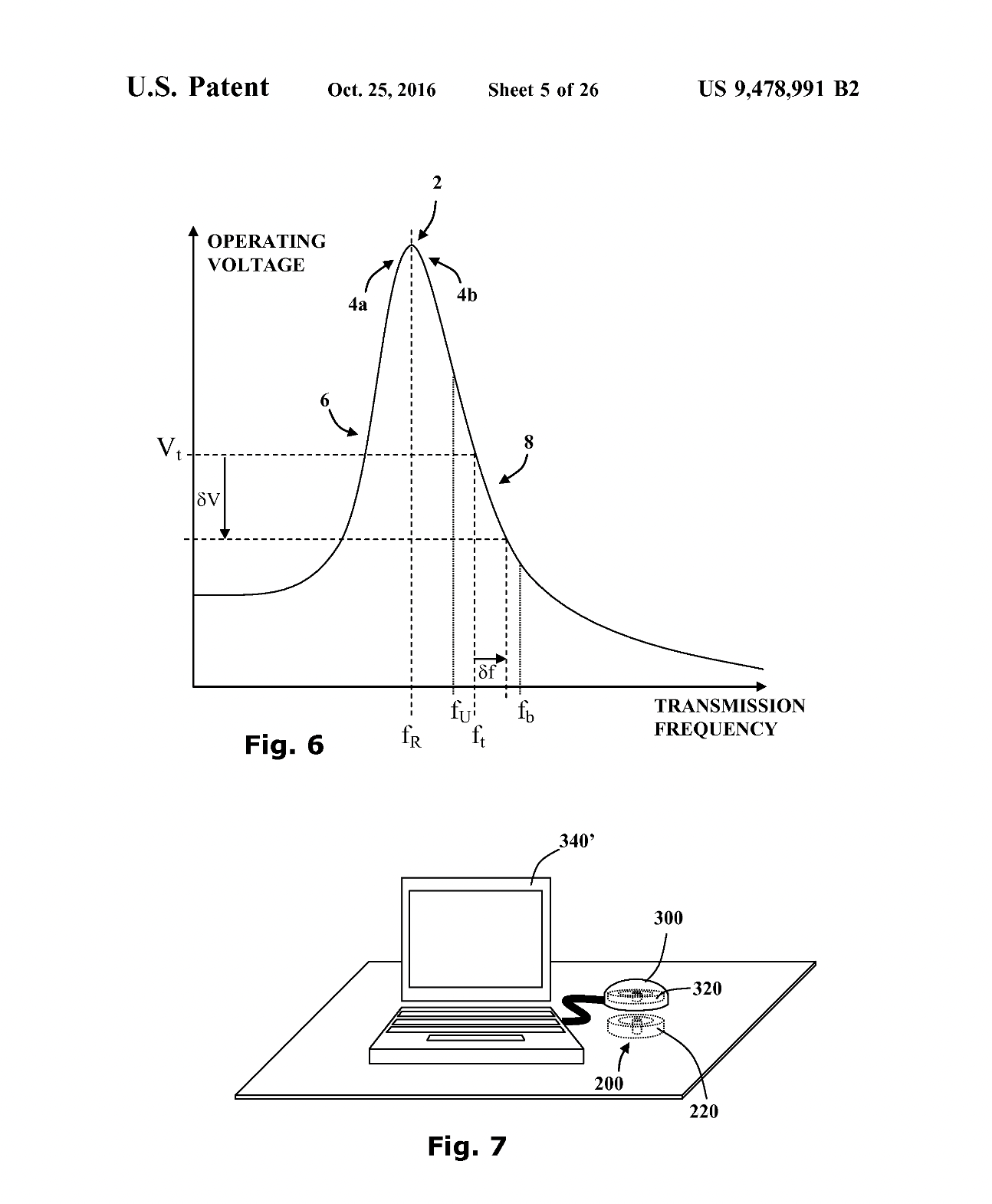

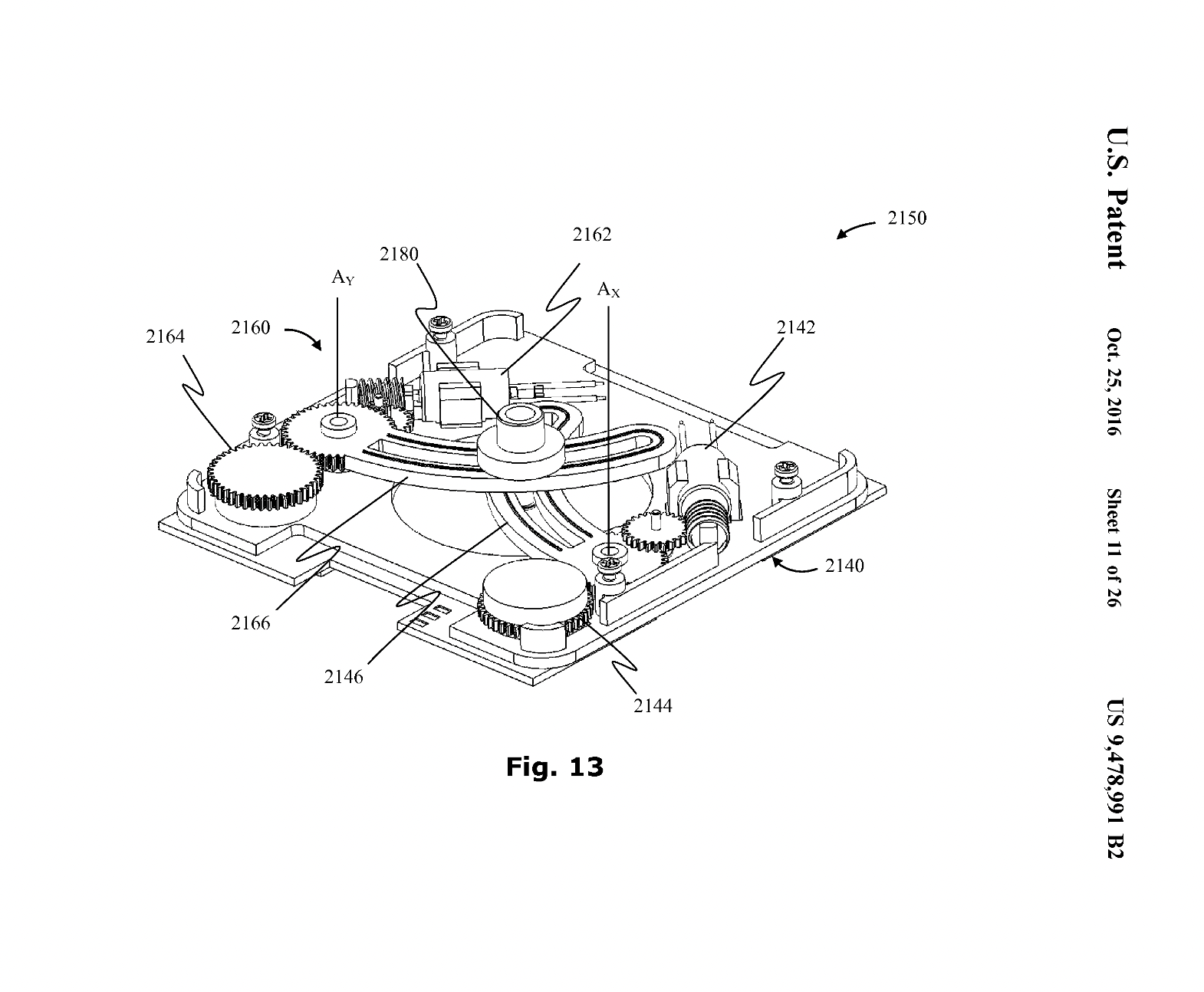

I wrote a patented gradient descent search algorithm for a wireless power charging solution

The base station self-adjusts to the user's device

The algorithm was written in C and runs as firmware using under 64 bytes of RAM
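To give a feel for this kind of search, here is a minimal sketch in Python rather than C. It is not the patented algorithm: it swaps in a plain finite-difference hill climb, the simplest relative of gradient descent, on a single integer setting. The function `seek_peak`, the 0-255 range, and the idea that the base station tunes one scalar to maximize a measured value are all assumptions made for illustration.

```python
def seek_peak(measure, setting, step=8, min_step=1, lo=0, hi=255):
    """Climb toward the setting that maximizes measure(), using only a few integers of state."""
    best = measure(setting)
    while step >= min_step:
        improved = False
        # Probe one step to each side; the better side stands in for the gradient sign.
        for candidate in (setting + step, setting - step):
            if lo <= candidate <= hi:
                value = measure(candidate)
                if value > best:
                    setting, best = candidate, value
                    improved = True
                    break
        if not improved:
            step //= 2  # no improvement at this scale: refine the step
    return setting


# Hypothetical response curve with a single peak at 137 (stands in for a real measurement).
def fake_power(setting):
    return -(setting - 137) ** 2


tuned = seek_peak(fake_power, setting=40)
print(tuned)
```

The loop keeps only the current setting, the best reading and the step size, which is the kind of footprint that fits in a very small RAM budget.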

I helped this guy build an Accordion Video Controller

An accordion that controls the playback of the video, where each key picks a different performance-clip to play, while the direction and speed of the video playback is controlled by the accordion's bellows.

I made a Visualisation of Wikipedia articles

I used advanced dimensionality reduction and text analysis techniques to create a two-dimensional semantic map of Wikipedia terms. Each term was represented by an individual pixel, color-coded by how contentious the topic was. The “controversiality measure” was extracted by carefully analyzing the revision history of each term to identify combative erasing and rewriting, indicative of a highly disputed topic.
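One simple way to spot "combative erasing and rewriting" is to count reverts, meaning revisions that restore an earlier version of the article. The sketch below is only an illustration of that idea and not necessarily the measure used in this project; `count_reverts`, `controversy_score` and the toy history are hypothetical.

```python
import hashlib


def count_reverts(revisions):
    """Count revisions whose text exactly matches an earlier, non-adjacent state."""
    seen = set()
    reverts = 0
    previous = None
    for text in revisions:
        digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
        if digest in seen and digest != previous:
            reverts += 1  # someone restored an older version
        seen.add(digest)
        previous = digest
    return reverts


def controversy_score(revisions):
    """Fraction of edits that are reverts (0.0 for a calm history)."""
    if len(revisions) < 2:
        return 0.0
    return count_reverts(revisions) / (len(revisions) - 1)


# Toy revision history: two editors flipping between versions A and B, then a new version C.
history = ["A", "B", "A", "B", "A", "C"]
score = controversy_score(history)
print(count_reverts(history), score)
```

A score like this could then be mapped to a pixel color, with calm terms near 0 and heavily disputed terms closer to 1.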

I made a Markov-Model Automatic Video Editor

"Movie Weaver" is an automatic video editor that utilizes the power of probabilistic models along with filmmaking paradigms to turn a collection of video clips into a movie. Note the smooth color transition of an optimized sequence of clips, compared to a random sequence.
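A rough sketch of how a first-order Markov model can favor smooth color transitions follows. It is not Movie Weaver's actual model: it assumes each clip is summarized by one average RGB color, makes the transition probability fall off with color distance, and greedily picks the most probable next clip. The clip names, the `temperature` parameter and the function names are invented for the example.

```python
import math


def transition_probs(current, candidates, colors, temperature=50.0):
    """Probability of cutting from `current` to each candidate; closer colors are likelier."""
    weights = {
        name: math.exp(-math.dist(colors[current], colors[name]) / temperature)
        for name in candidates
    }
    total = sum(weights.values())
    return {name: weight / total for name, weight in weights.items()}


def weave(colors, start):
    """Order clips by repeatedly taking the most probable transition from the last clip."""
    sequence = [start]
    remaining = set(colors) - {start}
    while remaining:
        probs = transition_probs(sequence[-1], remaining, colors)
        next_clip = max(sorted(probs), key=probs.get)  # sorted() makes ties deterministic
        sequence.append(next_clip)
        remaining.remove(next_clip)
    return sequence


# Hypothetical clips with their average RGB colors.
clips = {
    "sunset": (250, 120, 40),
    "campfire": (230, 90, 30),
    "forest": (40, 140, 60),
    "ocean": (20, 80, 200),
}
order = weave(clips, "sunset")
print(order)
```

Each choice depends only on the current clip, which is what makes this a Markov-style model; a fuller editor would search for the best whole sequence instead of choosing greedily.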

For more details read the book or see the poster

I developed and taught a Workshop for making motion-games using Depth Camera and Unity

Watch a show reel of the results of a 5-hour session. The workshop was held at:

Co-organizer of the "INFECTED" Hackathon at the "Center of Design and Technology".

I am currently self-employed doing freelance work.

Patent #9,478,991 System and method for transferring power inductively over an extended region

An inductive power transfer system operable in a plurality of modes comprising an inductive power transmitter capable of providing power to the inductive power receiver over an extended region. The system may be switchable between the various modes by means of a mode selector operable to activate various features as required, such as: an alignment mechanism, a resonance tuner, an auxiliary coil arrangement or a resonance seeking arrangement. Associated methods are taught.

Patent #9,459,758 Gesture-based interface with enhanced features

A method includes presenting, on a display coupled to a computer, an image of a keyboard comprising multiple keys, and receiving a sequence of three-dimensional (3D) maps including a hand of a user positioned in proximity to the display. An initial portion of the sequence of 3D maps is processed to detect a transverse gesture performed by a hand of a user positioned in proximity to the display, and a cursor is presented on the display at a position indicated by the transverse gesture. While presenting the cursor in proximity to the one of the multiple keys, one of the multiple keys is selected upon detecting a grab gesture followed by a pull gesture followed by a release gesture in a subsequent portion of the sequence of 3D maps.

Patent #9,377,865 Zoom-based gesture user interface

A method includes arranging, by a computer, multiple interactive objects as a hierarchical data structure, each node of the hierarchical data structure associated with a respective one of the multiple interactive objects, and presenting, on a display coupled to the computer, a first subset of the multiple interactive objects that are associated with one or more child nodes of one of the multiple interactive objects. A sequence of three-dimensional (3D) maps including at least part of a hand of a user positioned in proximity to the display is received, and the hand performing a transverse gesture followed by a grab gesture followed by a longitudinal gesture followed by an execute gesture is identified in the sequence of three-dimensional (3D) maps, and an operation associated with the selected object is accordingly performed.

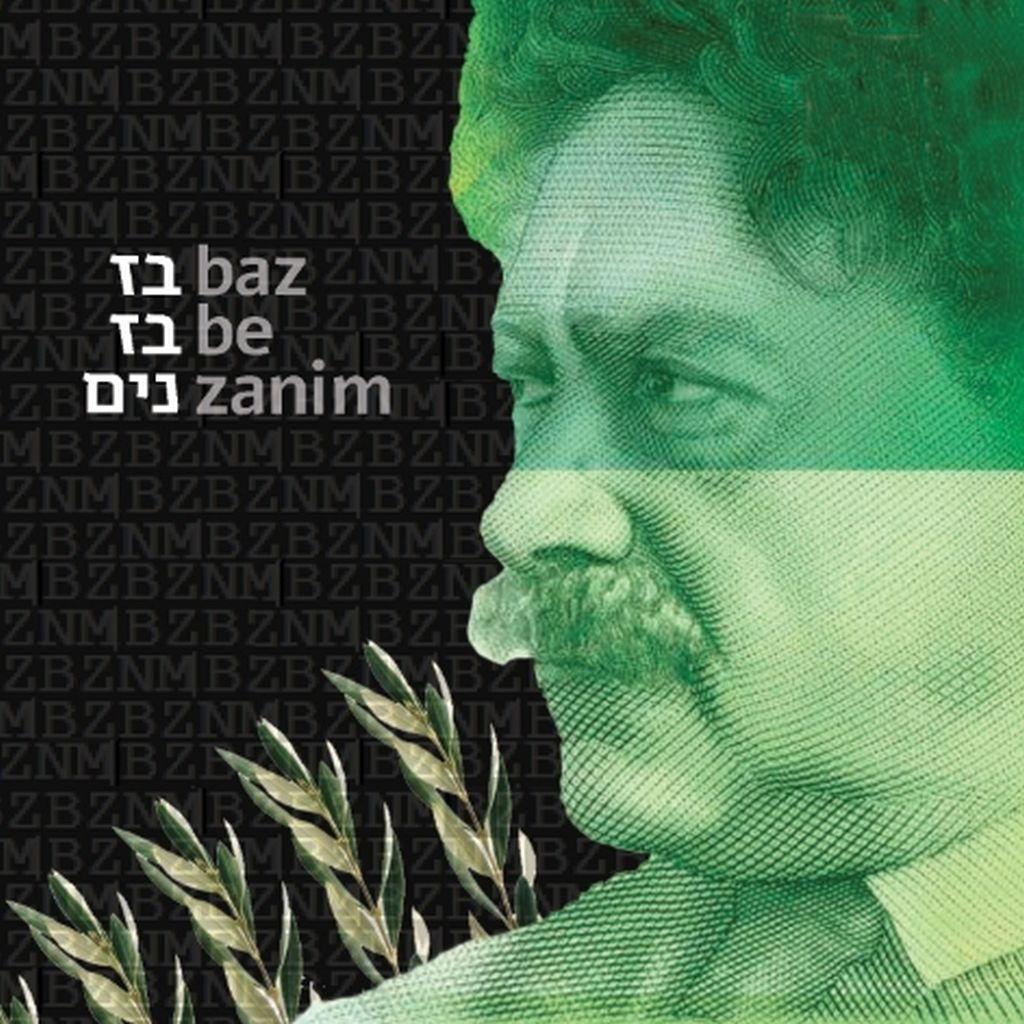

Recorded an album of songs and covers in one night at Studio-one, July 20, 2004

-- Listen!

I made some gifs (before it was cool):